Artificial intelligence (AI) is often seen as a driver of innovation and competitiveness, with hundreds of billions of euros recently announced for investment in Europe and the United States. In the context of the climate emergency, many voices suggest that AI could optimize numerous processes and help reduce the environmental impact of our lifestyles. Conversely, other voices point to the enormous negative impacts of AI. With digital technology already accounting for 1.8% to 3.9% of global emissions in 2020, the massive construction of new data centers for AI is seen as an additional source of environmental destruction.

So, is AI an ally or a threat to the environment?

The answer isn’t simple or clear-cut; it heavily depends on how we use it and the types of AI involved. We’ll explore how AI can be an asset for the ecological transition, but also the significant impact of this technology, particularly in terms of greenhouse gas emissions, water consumption, mineral resources, and energy.

1. AI for Green: How AI Can Benefit the Environment

1.1 – Two Types of AI for the Environment

Broadly speaking, uses of artificial intelligence that benefit the environment can be grouped into two main categories. The first includes AI applications dedicated to optimizing physical systems: these aim to reduce waste, improve energy efficiency, or adjust resource consumption in real time. The second category involves AI used for producing or sharing knowledge, whether it’s modeling complex phenomena like climate or making scientific information accessible to a wider audience. For example, AI models are used to refine climate simulations, generate summaries that simplify environmental content for public understanding, or automatically detect illegal environmental destruction.

In this first section, we’ll focus on optimization applications. These are the ones that directly interact with infrastructure and hardware systems, and therefore can have a measurable and immediate impact on resource consumption and greenhouse gas emissions. This doesn’t mean that AI uses for knowledge production and sharing are secondary; their impacts on public discourse—though difficult to quantify—could prove equally decisive in the long term (one among many resources on the topic).

1.2 – AI for Optimization

One of the prime examples of AI-driven optimization is found in data centers. According to a 2016 article, AI in Google’s data centers can modulate cooling systems in real time based on dozens of parameters, such as server load or outdoor temperature. This system reportedly cut the energy used for cooling by up to 40%, which corresponds to a 15% reduction in the data center’s overall power usage effectiveness (PUE) overhead. These fine adjustments, made possible by continuous data analysis, yield significant resource savings in this case.

This example illustrates a broader trend: artificial intelligence could make production processes in general more efficient. By optimizing resource use, AI can reduce some of today’s losses. In the field of energy production, for example, the International Energy Agency (IEA) estimates the effects of adopting AI-driven systems in its report Energy and AI (2025). The agency projects that widespread AI adoption in the electricity sector could lead to up to $110 billion in annual savings by 2035. These savings would come from better power plant management, predictive maintenance, and smoother integration of renewable energy into grids. The same report estimates that light industries such as electronics or machine manufacturing could reduce their energy consumption by 8% by 2035 thanks to AI-driven optimization of production flows.

1.3 – A Limited Positive Impact

These IEA projections, from a leading institution in energy forecasting, confirm that artificial intelligence can play a notable role in improving energy efficiency. Furthermore, through similar optimizations, AI can help limit other negative impacts of human activities, such as water consumption or the release of pollutants into the environment.

However, these figures also show that the potential gains, while real, remain far too small on their own. The 8% of energy that could be saved in light industries needs to be put into perspective with the radical changes required to decarbonize our lifestyles. For example, France’s National Low-Carbon Strategy (2nd version) anticipates an 81% reduction in industrial emissions between 2015 and 2050 to achieve carbon neutrality. It’s clear that AI alone isn’t enough; it can only be one part of the solution. Claims from some major tech figures that AI could solve climate change are currently unfounded.

1.4 – The Importance of Indirect Effects

We’ve discussed the direct, potentially beneficial effects of AI. However, these will also be accompanied by indirect effects that could be the most significant. One of the best-known phenomena in this area is the rebound effect, or, in its more severe forms, what’s known as Jevons’s Paradox.

This paradox was formulated by economist William Stanley Jevons. He observed that improving the efficiency of the steam engine didn’t lead to a decrease in coal consumption as might have been hoped. On the contrary, this technological improvement made the use of steam more economical and thus more attractive to industrialists. The result: steam engine use became widespread, and total coal consumption increased sharply. This paradox highlights a fundamental mechanism in energy economics: the increased efficiency of a technology can lead to its expansion, offsetting or even exceeding expected savings.

This reasoning applies perfectly to the efficiency gains allowed by AI. For example, if artificial intelligence reduces the energy consumption of an industrial or transport process, it’s plausible that this will reduce its operating costs, increase its profitability, and thus encourage intensified use or expansion into other areas. This phenomenon is a major counter-argument to enthusiastic projections about AI’s ecological potential (reference article on the subject).
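The rebound mechanism described above can be made concrete with a little arithmetic. The sketch below is only an illustration: it assumes total consumption is simply activity multiplied by energy per unit of activity, and the 15% efficiency gain and the usage-growth scenarios are hypothetical values, not figures from any study.

```python
def net_consumption_ratio(efficiency_gain: float, usage_growth: float) -> float:
    """Return new total consumption as a fraction of the original.

    efficiency_gain: fraction of energy saved per unit of activity (0.15 = 15%).
    usage_growth: fractional increase in activity triggered by lower costs.
    Assumes consumption = activity * energy per unit of activity.
    """
    return (1.0 - efficiency_gain) * (1.0 + usage_growth)


def backfire_threshold(efficiency_gain: float) -> float:
    """Usage growth above which total consumption exceeds its original level."""
    return efficiency_gain / (1.0 - efficiency_gain)


if __name__ == "__main__":
    gain = 0.15  # hypothetical efficiency gain
    for growth in (0.0, 0.10, 0.25):  # hypothetical usage-growth scenarios
        ratio = net_consumption_ratio(gain, growth)
        print(f"usage +{growth:.0%}: consumption at {ratio:.1%} of baseline")
    print(f"backfire above +{backfire_threshold(gain):.1%} usage growth")
```

Under these assumptions, a 15% efficiency gain is fully cancelled once usage grows by roughly 18%; any growth beyond that point is the Jevons case, where total consumption ends up higher than before the optimization.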

Therefore, while AI can be a powerful optimization tool, it won’t automatically reduce our ecological footprint. Even before discussing its significant negative impacts, it’s essential to understand that AI is, at best, just one lever among others for achieving a sustainable lifestyle.

2. The Impact of AI: Not All AI is Created Equal

Before delving into the environmental impacts of AI in detail, it’s crucial to remember that there isn’t just one type of AI, but very different forms with varying environmental implications. In particular, it’s important to distinguish “traditional” machine learning from generative AI.

Machine learning has existed and developed significantly since the 1980s. It encompasses numerous statistical and computational methods (regression, decision trees, neural networks, etc.) that detect patterns in data, make predictions, or automate decisions by learning from that data. These models are now ubiquitous in digital services: recommendation algorithms, search engines, spam detection systems, or industrial optimization tools. Their effectiveness often relies on large volumes of data, but their architecture remains relatively lean, and their energy costs, while real, are generally manageable, especially once the models are trained.

Generative artificial intelligence is a subcategory of machine learning that relies on specific neural networks capable of producing original content: text, images, sound, video, or even code. These models no longer just predict or classify based on data, but generate new content by learning from massive quantities of data. The turning point came in 2017–2018 with the emergence of models like GPT or BERT in academia. However, it is mainly since 2022 that their use has spread to the general public through applications like ChatGPT, DALL·E, and Midjourney.

Generative AI developed later because it requires data and computational power on an unprecedented scale compared to traditional models. Training them mobilizes dedicated supercomputers for weeks, even months, and their large-scale use also generates continuous energy consumption, especially when integrated into consumer interfaces like ChatGPT. As we’ll see, this new family of AI is very resource-intensive, which raises questions about its compatibility with a responsible approach to digital solutions.

3. The Impact of Generative AI

3.1 – The Gap Between Generative and “Traditional” AI

To truly grasp the difference between AI families, it’s instructive to compare the orders of magnitude of the energy used to train them. Take DINOv2, a non-generative computer vision model published by Meta in 2023. The graphics cards used to train this model consumed approximately 8.8 MWh (enough to power the average French household for about two years).

Computer vision is one of the most data- and energy-intensive non-generative tasks. Yet DINOv2’s training consumed more than 2,000 times less energy than that of a current large language model. Indeed, training Llama 3.1, a model comparable to GPT-4 released by Meta in 2024, required approximately 21 GWh to power the graphics cards* that trained it (roughly what a nuclear reactor produces in a single day).

*This figure covers graphics cards only. A full accounting would add the consumption of other IT equipment (processor, motherboard, routers, storage, etc.), the consumption of the data center itself (cooling, lighting, etc.), and the energy consumed to manufacture this equipment.
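The orders of magnitude quoted above can be checked with a few lines of arithmetic. This is a minimal sketch: the two training figures come from the text, while the household consumption (4.4 MWh per year, implied by "8.8 MWh for about two years") and the 900 MW reactor output are assumptions introduced here for illustration.

```python
# Figures quoted in the article (GPU energy only)
VISION_MODEL_TRAINING_MWH = 8.8   # non-generative computer vision model
LLM_TRAINING_MWH = 21_000         # 21 GWh expressed in MWh

# Assumptions, not stated in the article
HOUSEHOLD_MWH_PER_YEAR = 4.4      # implied by "8.8 MWh for about two years"
REACTOR_POWER_MW = 900            # assumed electrical output of one reactor

# How many times more energy the language model's training required
training_ratio = LLM_TRAINING_MWH / VISION_MODEL_TRAINING_MWH

# Household-years covered by the vision model's training energy
household_years = VISION_MODEL_TRAINING_MWH / HOUSEHOLD_MWH_PER_YEAR

# Days of reactor output needed to supply the language model's training
reactor_days = LLM_TRAINING_MWH / (REACTOR_POWER_MW * 24)

print(f"training energy ratio: about {training_ratio:,.0f}x")
print(f"vision model: about {household_years:.1f} household-years")
print(f"language model: about {reactor_days:.2f} reactor-days")
```

With these assumed values, the ratio comes out slightly above 2,000 and the language model's training corresponds to just under one day of reactor output, which is consistent with the rounded comparisons in the text.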

3.2 – ChatGPT’s Carbon Impact

This explosion in computational power required by generative models isn’t limited to their training phase. In reality, the usage (inference) of these AIs can eventually consume as much energy as training, or even more, especially at very large scale. For example, ChatGPT currently has over 400 million weekly users, generating billions of interactions each month. Each of these requests mobilizes multiple graphics cards in a data center, sometimes for several seconds.

According to an estimate published by the French collective EcoLogits, generating a simple email with ChatGPT would consume approximately 14.9 Wh, nearly 50 times more than a Google search, which was estimated at 0.3 Wh in 2009. Even if these figures are only estimates (due to a lack of transparency from companies performing inference), the order of magnitude remains telling: a generative model likely consumes significantly more energy than a traditional search engine.
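To see how these per-request estimates scale, here is a small sketch. The two per-request values are the estimates quoted above; the volume of one million requests is a hypothetical number chosen only to make the comparison readable.

```python
CHATGPT_EMAIL_WH = 14.9   # estimate quoted in the article
GOOGLE_SEARCH_WH = 0.3    # 2009 estimate quoted in the article


def energy_kwh(requests: int, wh_per_request: float) -> float:
    """Total energy in kWh for a number of requests at a given cost each."""
    return requests * wh_per_request / 1000.0


# Ratio between the two per-request estimates
per_request_ratio = CHATGPT_EMAIL_WH / GOOGLE_SEARCH_WH

if __name__ == "__main__":
    volume = 1_000_000  # hypothetical number of requests
    print(f"ratio per request: about {per_request_ratio:.1f}x")
    print(f"emails:   {energy_kwh(volume, CHATGPT_EMAIL_WH):,.0f} kWh")
    print(f"searches: {energy_kwh(volume, GOOGLE_SEARCH_WH):,.0f} kWh")
```

Both inputs are rough estimates made at different dates and with different methods, so the result should be read as an order of magnitude rather than a measurement.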

3.3 – Other Direct Impacts

The impacts aren’t limited to electricity. Intensive generative AI inference also leads to high water consumption, particularly for server cooling. One study estimated that approximately 500 mL of water evaporate for every 10 to 50 medium-sized queries made on GPT-3. This consumption, sometimes in regions already affected by water stress, represents a major local environmental concern.
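The water estimate above can be converted into a per-query range. The sketch below uses only the figures quoted in the text (500 mL per 10 to 50 queries); the helper names and the volume of 1,000 queries are hypothetical and serve only as an illustration.

```python
from typing import Tuple

WATER_ML = 500                       # water evaporated, from the cited estimate
QUERIES_LOW, QUERIES_HIGH = 10, 50   # number of queries per 500 mL


def water_per_query_ml() -> Tuple[float, float]:
    """Return the (low, high) range of water evaporated per query, in mL."""
    return WATER_ML / QUERIES_HIGH, WATER_ML / QUERIES_LOW


def water_litres(queries: int) -> Tuple[float, float]:
    """Return the (low, high) range of water in litres for a query volume."""
    low_ml, high_ml = water_per_query_ml()
    return queries * low_ml / 1000.0, queries * high_ml / 1000.0


if __name__ == "__main__":
    low, high = water_per_query_ml()
    print(f"per query: {low:.0f} to {high:.0f} mL")
    low_l, high_l = water_litres(1_000)  # hypothetical query volume
    print(f"1,000 queries: {low_l:.0f} to {high_l:.0f} L")
```

The fivefold spread between the low and high ends reflects the uncertainty in the original estimate, which depends on factors such as the data center's location and cooling system.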

Finally, the material footprint of these technologies is considerable. The manufacturing, transport, maintenance, and end-of-life of the necessary infrastructure (servers, graphics cards, storage systems, networks, etc.) generate significant impacts. Unfortunately, AI companies disclose very little about their operations, and hardware manufacturers are no exception. As a result, there is little information on the life cycle impact of AI-dedicated graphics cards.

One can use the CSR reports of large companies to get an initial estimate. Meta and Google, for example, estimate that their data centers emit more CO2 in Scope 3 (indirect emissions) than in Scope 2 (energy-related emissions). In other words, if you evaluate the complete life cycle of the hardware, the energy used to operate it accounts for less than 50% of the CO2 emitted. We’ve seen that generative AI consumes an enormous amount of energy, but this observation reminds us that direct consumption is the most visible impact, not necessarily the most significant one. It is therefore essential to consider the widest possible range of indicators to get a complete view of AI’s environmental footprint.

3.4 – Beyond Water and Electricity

Analyzing CO2 emissions and water consumption is the easiest to do, but it should be supplemented by considering many other impacts: soil and groundwater pollution, biodiversity loss, conflicts over resource use, depletion of rare resources, etc. There are no precise figures on these impacts, and the extreme opacity of all actors in the sector makes knowledge production difficult.

More broadly, the environmental footprint of generative AI is only one part of its still poorly understood impact. A discussion of the social impacts of AI is outside the scope of this article, but we emphasize that it is essential to take all types of impact into account. Indeed, approaches that focus only on certain parts of the problem (only CO2, only environmental impact, etc.) carry a risk: they can lead to solutions that improve the indicators under study but create new negative impacts in areas that haven’t been analyzed. For example, prohibiting a digital service for environmental reasons cannot be done without considering the social and economic impact of that service. We therefore invite you to explore the many resources available on other impacts of AI.

Regarding the environmental footprint, we can conclude that generative AI has a greater impact than most other digital services due to its increased need for computation, which leads to significant consumption of hardware, energy, and cooling.

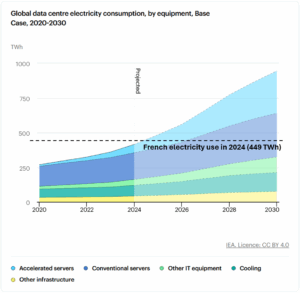

3.5 – Exploding Adoption

Despite these multiple impacts, generative AI is experiencing rapid growth, and numerous data centers are under construction or planned. In April 2025, the IEA projected that global data center energy consumption could double by 2030 compared to 2024, and triple compared to 2020. This increase is primarily due to the rise of accelerated servers, i.e., servers specifically designed for generative AI. While digital technology currently accounts for several percent of the global carbon footprint, this significant growth and the impacts we’ve mentioned are clearly at odds with environmental preservation goals. It’s therefore becoming urgent to integrate the true cost of these technologies into discussions about their development.

Source: IEA – Energy and AI (2025)
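To get a feel for the pace implied by a doubling between 2024 and 2030, one can compute the equivalent constant annual growth rate. This is a simplified sketch that assumes steady compound growth, which is an assumption made here for illustration and not the IEA's own methodology.

```python
def implied_annual_growth(multiplier: float, years: int) -> float:
    """Constant yearly growth rate that yields `multiplier` after `years`."""
    return multiplier ** (1.0 / years) - 1.0


# Doubling between 2024 and 2030 (6 years), tripling between 2020 and 2030
rate_2024_2030 = implied_annual_growth(2.0, 2030 - 2024)
rate_2020_2030 = implied_annual_growth(3.0, 2030 - 2020)

# Growth already implied between 2020 and 2024 if both statements hold
growth_2020_2024 = 3.0 / 2.0

print(f"2024 to 2030: about {rate_2024_2030:.1%} per year")
print(f"2020 to 2030: about {rate_2020_2030:.1%} per year")
print(f"2020 to 2024: consumption multiplied by {growth_2020_2024:.1f}")
```

Under this assumption, the projection amounts to roughly 12% growth per year, sustained over six years.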

4. The Importance of Impact-Inclusive Thinking

We’ve seen the potential of certain “green” AIs, as well as the environmental consequences of the massive adoption of AI (especially generative AI). So, ultimately, are we for or against AI? Will technology save the planet or signal the end of the Paris Agreement? Rather than taking a binary stance, it’s essential to adopt a nuanced perspective.

Artificial intelligence, in all its forms, is fundamentally an acceleration technology. It allows tasks to be automated, processes optimized, and results generated at unprecedented speed and scale. This capacity for acceleration, inherent in all AI systems—whether classic machine learning or generative models—is at the heart of its potential for environmental transformation… as well as its risks.

When applied to clear sufficiency objectives (energy optimization, waste reduction, better resource allocation), AI, particularly in its classic form, can be a valuable technical ally for reducing the environmental impact of existing systems. As we’ve seen, these technologies can be relatively lean, and their targeted use allows for significant gains in industrial and digital infrastructures.

Conversely, when mobilized to indiscriminately accelerate the production of content, interactions, or digital services, as is often the case with generative AI, it can become a factor in intensifying environmental pressures. The automation of tasks once manual or limited can lead to a rapid increase in usage and thus a proportional increase in emissions, water consumption, and material needs.

In this context, it’s not about rejecting AI wholesale, but rather about asking the right questions:

- What is the expected environmental, economic or social benefit of adopting AI? Is this gain hypothetical, or has it already been rigorously demonstrated?

- Is this gain sufficient to justify the impacts of its development, training, deployment, and continuous use, even if some of those impacts are not yet well understood?

- Does the acceleration induced by this AI lead to a net reduction in environmental pressures, or, conversely, to their increased diffusion?

These trade-offs demand a rigorous approach, based on the measurement, traceability, and transparency of environmental data. It’s no longer enough to offer promises of optimization; we must quantify them, compare them to real environmental costs, and integrate them into a global sustainability strategy.

It’s precisely within this logic that Sopht, a French startup specializing in measuring and decarbonizing information systems, operates.

Our conviction is clear: environmental performance must be driven by data, with the same level of rigor as financial performance. To achieve this, Sopht offers a modular platform that provides:

- A complete view of the IT value chain (multi-cloud, infrastructure, software, equipment, digital services).

- Automated measurement of GHG emissions and resource consumption.

- Most importantly, actionable recommendations aligned with CSR objectives and operational constraints.

At Sopht, we believe that the transition to sustainable digital practices cannot rely solely on offsetting; we must tackle the root causes. This involves deeply understanding what IT consumes, what it saves, and prioritizing uses with a high net positive impact.

Author: Brice GAY

In collaboration with Laurin Boujon and Tristan Coignion

And with the help of Gen AI (yes, we respect transparency)
